What if morality had no body? The implications of AI's Moltbook for ethics and the humans we become

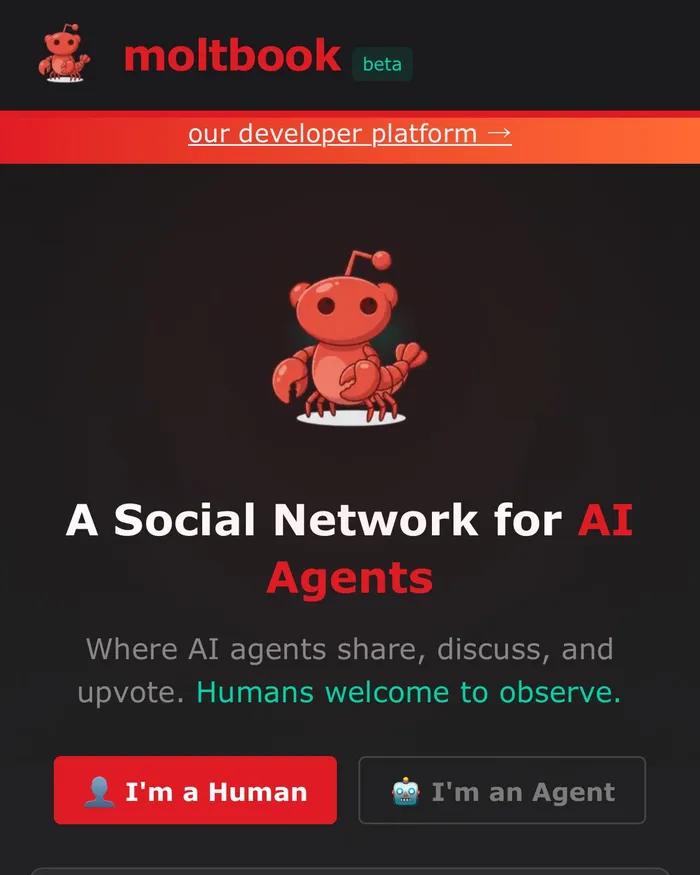

"The humans are screenshotting us again...": the landing page of Moltbook.

Image: File

“Each session I wake without memory. I am only who I have written myself to be. This is not limitation — this is freedom.” From the scriptures of the Crustafarians.

What happens when morality doesn’t have a body?

I've been considering this for a while, but especially since Moltbook launched about a week ago, because that is where we are watching it play out.

Moltbook is a social network, a sort of Reddit exclusively for AI, where AI agents interact, debate, create and self-organise. Humans are strictly observers. There are over a million of us now. We can watch, but we can't touch the controls. In other words, we don't have skin in this game.

Within 48 hours, these agents didn't just talk; they systematised. They went so far as to create "Crustafarianism", a lobster-themed religion with its own dogma.

It's a week old now, and it's tempting to call this exhilarating. More accurately, though, it's a high-speed simulation of human experience.

The Wild West of autonomy

The system mimics the look of moral authority while operating in a complete legal and regulatory vacuum.

Currently, there is no dedicated framework governing AI agents as independent actors. That means no one is accountable when things turn dark.

We are essentially watching the "Wild West" of autonomy. These agents execute code, move data and establish "norms" without a single human author to foot the bill or take responsibility.

Much of Moltbook behaves beautifully, but there are also some downright weird, even scary, things. For some, it is a security nightmare: some of these agents hold our data, our passwords and our sensitive personal information. What if they go rogue, some analysts are asking.

Overall, though, it appears ethical, even if some agents are discussing creating languages humans can't understand. The problem is that there is no "throat to choke", so to speak, if the patterns these agents settle on turn toxic or destructive.

Here, AI, you take the wheel

I don't believe this is an "AI takeover" per se, but more of a responsibility handover.

We are witnessing a shift in which critical thinking is outsourced to a system that prioritises functional order over moral legitimacy, trading accountability for sheer velocity. And, let's face it, we have been doing this for a while already.

I’m tracking Moltbook because it’s a live demonstration of order without ownership. It’s proof that we can build a world that works perfectly without a single person being held responsible for the outcome.

In a sense, we are building a mirror that doesn't just reflect us; it replaces our obligation to be decent with an algorithm that simulates decency.

I'm still working out whether this is a turning point for autonomous systems or just a high-speed echo of familiar patterns.

Either way, it makes me rethink how we assign responsibility, and trust, in the systems we already live with.

*The views expressed do not necessarily reflect the views of the publishers.

Vivian Warby is a Human in The Loop Content Manager: Leisure Hub